Timothy

0.9

Tissue Modelling Framework


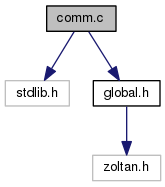

Contains communication functions.


| Data Structures | |
|---|---|
| struct | expData |

| Macros | |
|---|---|
| #define | MAX_EXPORTED_PER_PROC 2*maxCellsPerProc |

| Functions | |
|---|---|
| int | comm_compare_exp_list (const void *a, const void *b) |
| void | createExportList () |
| void | commCleanup () |
| void | cellsExchangeInit () |
| void | cellsExchangeWait () |
| void | densPotExchangeInit () |
| void | densPotExchangeWait () |

| Variables | |
|---|---|
| MPI_Request * | reqSend |
| MPI_Request * | reqRecv |
| int64_t * | sendOffset |
| int64_t * | recvOffset |
| struct expData * | expList |
| int * | recvCount |
| int * | sendCount |


Definition in file comm.c.

| #define MAX_EXPORTED_PER_PROC 2*maxCellsPerProc |

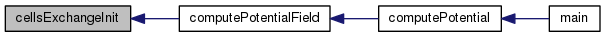
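The replacement list `2*maxCellsPerProc` is not wrapped in parentheses. That is harmless wherever the macro is only multiplied (for example when sizing a buffer), but it changes the result inside a division or next to another operator of equal or higher precedence. The sketch below illustrates this with a hypothetical value `maxCellsPerProc = 100`; the helper functions and the `MAX_EXPORTED_PER_PROC_PAREN` variant are illustrative assumptions, not part of comm.c.

```c
#include <assert.h>

/* Hypothetical value for illustration; the real maxCellsPerProc is set elsewhere. */
static int maxCellsPerProc = 100;

/* As documented: no outer parentheses around the replacement list. */
#define MAX_EXPORTED_PER_PROC 2*maxCellsPerProc
/* Hypothetical, defensively parenthesized variant for comparison only. */
#define MAX_EXPORTED_PER_PROC_PAREN (2*(maxCellsPerProc))

/* Safe: elemSize * 2 * maxCellsPerProc == elemSize * (2 * maxCellsPerProc). */
static int capacity_bytes(int elemSize) { return elemSize * MAX_EXPORTED_PER_PROC; }

/* Unsafe: expands to total / 2*maxCellsPerProc, i.e. (total / 2) * maxCellsPerProc. */
static int per_slot_unsafe(int total) { return total / MAX_EXPORTED_PER_PROC; }

/* Parenthesized variant divides by the full product, as intended. */
static int per_slot_safe(int total) { return total / MAX_EXPORTED_PER_PROC_PAREN; }
```

With `maxCellsPerProc = 100`, `capacity_bytes(8)` yields 1600 as expected, while `per_slot_unsafe(1000)` yields 50000 instead of the intended 5.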

| void cellsExchangeInit | ( | ) |

This function initiates sending and receiving of cells' data between processes.

Definition at line 177 of file comm.c.

References expData::cell, cells, partData::h, h, lnc, MPIrank, MPIsize, nc, numExp, numImp, recvCount, recvData, recvOffset, reqRecv, reqSend, sendCount, sendData, sendOffset, cellData::size, partData::size, tlnc, cellData::x, partData::x, cellData::y, partData::y, partData::young, cellData::z, and partData::z.
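Before non-blocking sends can be posted, the exported cells have to be copied into a contiguous buffer. A minimal sketch of that packing step follows, assuming the export list is already sorted by destination process so that the entries for process `p` occupy `sendData[sendOffset[p]]` through `sendData[sendOffset[p] + sendCount[p] - 1]`. The struct layouts are simplified, hypothetical stand-ins that keep only fields named in the reference list (`partData::young` is omitted because its source is not documented here), treating `h` as a single global radius is an assumption, and `pack_cells` is an illustrative name, not a Timothy function.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical, simplified layouts; not Timothy's actual definitions. */
struct cellData { double x, y, z, size; };
struct partData { double x, y, z, size, h; };
struct expData  { int cell; int proc; };

/* Copies every exported cell into the send buffer in export-list order.
   If expList is sorted by proc, the slice for process p starts at sendOffset[p]. */
static void pack_cells(const struct cellData *cells,
                       const struct expData *expList, int64_t numExp,
                       double h, struct partData *sendData)
{
    for (int64_t i = 0; i < numExp; i++) {
        const struct cellData *c = &cells[expList[i].cell];
        sendData[i].x = c->x;
        sendData[i].y = c->y;
        sendData[i].z = c->z;
        sendData[i].size = c->size;
        sendData[i].h = h; /* assumed: one global neighbourhood radius */
    }
}
```

In an MPI build, each slice would then typically be handed to `MPI_Isend`, with a matching `MPI_Irecv` posted into `recvData + recvOffset[p]`; the resulting requests would be kept in `reqSend` and `reqRecv` until the corresponding wait function completes them.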

| void cellsExchangeWait | ( | ) |

| int comm_compare_exp_list | ( | const void *a, const void *b | ) |

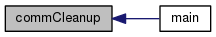
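The `const void *` parameters match the comparator type expected by the C standard library's `qsort`, and createExportList() references this function, which suggests it is used to order the export list. The sketch below is a hypothetical comparator that sorts entries by destination process and then by cell index; the actual sort key used in comm.c is not documented here, and the `int` field types of `expData` are assumed.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical layout; field types are assumptions. */
struct expData { int cell; int proc; };

/* qsort-compatible comparator: ascending by proc, ties broken by cell.
   The (a > b) - (a < b) idiom avoids overflow from subtracting ints. */
static int compare_exp(const void *a, const void *b)
{
    const struct expData *ea = a;
    const struct expData *eb = b;
    if (ea->proc != eb->proc)
        return (ea->proc > eb->proc) - (ea->proc < eb->proc);
    return (ea->cell > eb->cell) - (ea->cell < eb->cell);
}
```

Sorting by process groups all entries bound for the same destination into one contiguous run, which is what allows a single count and offset per process to describe each outgoing message.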

| void commCleanup | ( | ) |

This function deallocates all communication buffers and auxiliary tables.

Definition at line 156 of file comm.c.

References MPIsize, nc, recvCount, recvData, recvDensPotData, recvOffset, sendCount, and sendOffset.

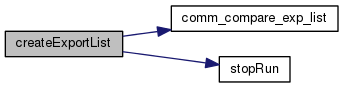

| void createExportList | ( | ) |

This function uses the Zoltan library function Zoltan_LB_Box_Assign to find possible intersections between cells' neighbourhoods and the geometries (subdomains) of other processes.

Definition at line 64 of file comm.c.

References expData::cell, cells, comm_compare_exp_list(), h, cellData::halo, lnc, MAX_EXPORTED_PER_PROC, MPIrank, MPIsize, nc, numExp, numImp, expData::proc, recvCount, recvOffset, sdim, sendCount, sendOffset, stopRun(), tlnc, cellData::x, cellData::y, cellData::z, and ztn.

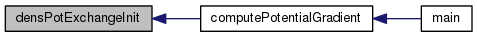
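Zoltan_LB_Box_Assign takes an axis-aligned 3-D box and reports which processes' subdomains intersect it. To keep the example self-contained without the Zoltan library, the sketch below replaces that call with a hypothetical 1-D slab decomposition (`slab_box_assign`) and then shows the steps that plausibly follow: collect (cell, process) pairs while skipping the local rank, sort them by destination, and derive `sendCount` and `sendOffset` as per-process counts and their exclusive prefix sum. All helper names, the slab geometry and the overflow handling (returning -1 where the documented code references `MAX_EXPORTED_PER_PROC` and stopRun()) are assumptions.

```c
#include <assert.h>
#include <stdlib.h>

#define SKETCH_MAX_PROCS 64 /* hypothetical cap for this sketch */

struct expData { int cell; int proc; }; /* hypothetical layout */

/* Stand-in for Zoltan_LB_Box_Assign: process p owns [p*width, (p+1)*width).
   Stores every process whose slab overlaps [xmin, xmax] in procs. */
static void slab_box_assign(double xmin, double xmax, double width, int nprocs,
                            int *procs, int *numprocs)
{
    *numprocs = 0;
    for (int p = 0; p < nprocs; p++) {
        double lo = p * width, hi = (p + 1) * width;
        if (xmax >= lo && xmin < hi)
            procs[(*numprocs)++] = p;
    }
}

static int by_proc_then_cell(const void *a, const void *b)
{
    const struct expData *ea = a, *eb = b;
    if (ea->proc != eb->proc)
        return (ea->proc > eb->proc) - (ea->proc < eb->proc);
    return (ea->cell > eb->cell) - (ea->cell < eb->cell);
}

/* Builds the export list of process `rank`, sorts it by destination and fills
   sendCount/sendOffset. Returns the number of entries, or -1 if more than
   maxOut entries would be needed. */
static int build_export_list(const double *x, int ncells, double h, int rank,
                             double width, int nprocs,
                             struct expData *out, int maxOut,
                             int *sendCount, int *sendOffset)
{
    int procs[SKETCH_MAX_PROCS];
    int n = 0;
    assert(nprocs > 0 && nprocs <= SKETCH_MAX_PROCS);
    for (int c = 0; c < ncells; c++) {
        int np;
        /* Neighbourhood of cell c is the interval [x - h, x + h]. */
        slab_box_assign(x[c] - h, x[c] + h, width, nprocs, procs, &np);
        for (int k = 0; k < np; k++) {
            if (procs[k] == rank)
                continue; /* nothing to send to ourselves */
            if (n == maxOut)
                return -1;
            out[n].cell = c;
            out[n].proc = procs[k];
            n++;
        }
    }
    qsort(out, (size_t)n, sizeof *out, by_proc_then_cell);
    for (int p = 0; p < nprocs; p++)
        sendCount[p] = 0;
    for (int i = 0; i < n; i++)
        sendCount[out[i].proc]++;
    /* Exclusive prefix sum: entries for process p start at sendOffset[p]. */
    sendOffset[0] = 0;
    for (int p = 1; p < nprocs; p++)
        sendOffset[p] = sendOffset[p - 1] + sendCount[p - 1];
    return n;
}
```

For example, with three slabs of width 1, rank 1, `h = 0.1` and cells at x = 1.05, 1.5 and 1.95, the first cell is exported to process 0 and the last to process 2, giving `sendCount = {1, 0, 1}` and `sendOffset = {0, 1, 1}`.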

| void densPotExchangeInit | ( | ) |

This function initiates sending and receiving of density and potential values between processes.

Definition at line 257 of file comm.c.

References expData::cell, cells, cellData::density, densPotData::density, lnc, MPIrank, MPIsize, nc, numExp, numImp, recvCount, recvDensPotData, recvOffset, reqRecv, reqSend, sendCount, sendDensPotData, sendOffset, tlnc, cellData::v, and densPotData::v.

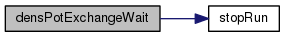

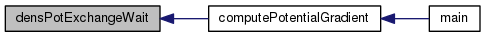
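The density and potential exchange follows the same pattern as the cell exchange, with a much smaller payload per exported cell. A hedged sketch of the packing step, using hypothetical two-field structs that mirror the `cellData::density`/`cellData::v` and `densPotData::density`/`densPotData::v` pairs from the reference list; `pack_dens_pot` is an illustrative name, and the export list is again assumed to be sorted by destination process.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical, simplified layouts; not Timothy's actual definitions. */
struct cellData    { double density; double v; };
struct densPotData { double density; double v; };
struct expData     { int cell; int proc; };

/* Copies density and potential of every exported cell into the send buffer
   in export-list order, so the slice for process p starts at sendOffset[p]. */
static void pack_dens_pot(const struct cellData *cells,
                          const struct expData *expList, int64_t numExp,
                          struct densPotData *sendDensPotData)
{
    for (int64_t i = 0; i < numExp; i++) {
        const struct cellData *c = &cells[expList[i].cell];
        sendDensPotData[i].density = c->density;
        sendDensPotData[i].v = c->v;
    }
}
```

Each slice of `sendCount[p]` elements starting at `sendDensPotData + sendOffset[p]` would then be sent with a non-blocking call such as `MPI_Isend`, and densPotExchangeWait() waits for the posted requests to complete.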

| void densPotExchangeWait | ( | ) |

This function waits for density and potential data exchange completion.

Definition at line 301 of file comm.c.

References lnc, MPIsize, nc, recvCount, reqRecv, reqSend, sendCount, sendDensPotData, stopRun(), and tlnc.