Timothy

0.9

Tissue Modelling Framework

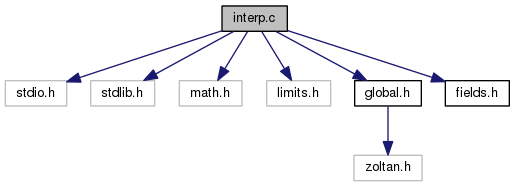

Contains grid-to-cellular data interpolation functions.

#include <stdio.h>

#include <stdlib.h>

#include <math.h>

#include <limits.h>

#include "global.h"

#include "fields.h"


Macros

| Kind | Definition |
| --- | --- |
| #define | patch(p, i, j, k) (cicPatch[p][patchSize[p].y*patchSize[p].z*i+patchSize[p].z*j+k]) |
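The `patch` macro addresses each patch as a flat array in which `k` (the z index) varies fastest, then `j`, then `i`. The same arithmetic can be written as a small function. This is only an illustrative sketch: `Size3` and `flatIndex` are hypothetical names that do not appear in interp.c, and the bounds checks are an addition.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical stand-in for struct int64Vector3d (only the fields used here). */
typedef struct { int64_t x, y, z; } Size3;

/* Same arithmetic as the patch() macro: z varies fastest, then y, then x. */
int64_t flatIndex(Size3 size, int64_t i, int64_t j, int64_t k)
{
    assert(i >= 0 && i < size.x);
    assert(j >= 0 && j < size.y);
    assert(k >= 0 && k < size.z);
    return size.y * size.z * i + size.z * j + k;
}
```

As listed, the macro does not parenthesize its arguments, so an argument such as `i+1` would expand incorrectly. A function form like the one above avoids that pitfall.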

Functions

| Return type | Function |
| --- | --- |
| void | findPatches () |
| void | doInterpolation () |
| void | initPatchExchange () |
| int | waitPatchExchange () |
| int | applyPatches () |
| void | initFieldsPatchesExchange () |
| void | waitFieldsPatchesExchange () |
| void | applyFieldsPatches () |
| void | interpolateCellsToGrid () |
| void | initCellsToGridExchange () |
| void | waitCellsToGridExchange () |
| void | interpolateFieldsToCells () |

Variables

| Type | Name |
| --- | --- |
| double ** | cicPatch |
| int * | cicIntersect |
| int * | cicReceiver |
| int * | cicSender |
| double ** | cicRecvPatch |
| MPI_Request * | cicReqSend |
| MPI_Request * | cicReqRecv |
| int * | recvP |
| struct int64Vector3d * | lowerPatchCorner |
| struct int64Vector3d * | upperPatchCorner |
| struct int64Vector3d * | lowerPatchCornerR |
| struct int64Vector3d * | upperPatchCornerR |
| struct int64Vector3d * | patchSize |
| struct int64Vector3d * | patchSizeR |

Contains grid-to-cellular data interpolation functions.

Definition in file interp.c.

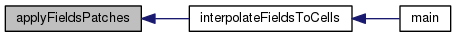

void applyFieldsPatches ()

Updates local cell fields with information received in patches from remote processes. The receiving field patches are deallocated here.

Definition at line 509 of file interp.c.

References cellFields, cells, cicReceiver, fieldsPatches, gridEndIdx, gridResolution, gridStartIdx, lnc, MPIsize, NFIELDS, x, cellData::x, doubleVector3d::x, int64Vector3d::x, cellData::y, doubleVector3d::y, int64Vector3d::y, cellData::z, doubleVector3d::z, and int64Vector3d::z.
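The page does not state which weighting is used when grid field values are applied to a cell. A common choice, and the counterpart of the Cloud-In-Cell deposit used elsewhere in this file, is a trilinear gather over the eight surrounding grid vertices. The sketch below assumes that scheme. `gatherTrilinear` is a hypothetical helper, not a function from interp.c.

```c
#include <assert.h>

/* Hypothetical helper: trilinear (CIC-weighted) gather of a field value.
   v[a][b][c] is the field at the vertex offset (a, b, c) from the lower
   corner of the grid cell; dx, dy, dz are the fractional offsets of the
   point inside that grid cell, each in [0, 1]. */
double gatherTrilinear(double v[2][2][2], double dx, double dy, double dz)
{
    assert(dx >= 0.0 && dx <= 1.0);
    assert(dy >= 0.0 && dy <= 1.0);
    assert(dz >= 0.0 && dz <= 1.0);

    double wx[2] = { 1.0 - dx, dx };
    double wy[2] = { 1.0 - dy, dy };
    double wz[2] = { 1.0 - dz, dz };

    double sum = 0.0;
    for (int a = 0; a < 2; a++)
        for (int b = 0; b < 2; b++)
            for (int c = 0; c < 2; c++)
                sum += wx[a] * wy[b] * wz[c] * v[a][b][c];
    return sum;
}
```

With these weights a field that varies linearly across the grid cell is reproduced exactly at the sample point.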

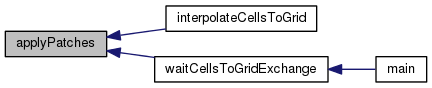

int applyPatches ()

Updates the local part of densityField with information received in patches from remote processes. The receiving patches are deallocated here.

Definition at line 338 of file interp.c.

References cicIntersect, cicPatch, cicRecvPatch, cicSender, densityField, gridEndIdx, gridSize, gridStartIdx, MPIrank, MPIsize, x, int64Vector3d::x, doubleVector3d::y, int64Vector3d::y, doubleVector3d::z, and int64Vector3d::z.
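As a rough illustration of this update step, the sketch below adds one received patch into a local field array. It assumes that contributions are accumulated with `+=`, that both arrays are flat with z varying fastest (as in the `patch` macro), and that the patch corner is already expressed in local field indices. `Idx3` and `addPatch` are hypothetical names, not part of interp.c.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical stand-in for struct int64Vector3d. */
typedef struct { int64_t x, y, z; } Idx3;

/* Add a received patch into the local field (assumed accumulation).
   lower is the patch's lower corner in local field indices; size is the
   patch extent along each axis. */
void addPatch(double *field, Idx3 fieldSize,
              const double *patch, Idx3 lower, Idx3 size)
{
    assert(lower.x >= 0 && lower.x + size.x <= fieldSize.x);
    assert(lower.y >= 0 && lower.y + size.y <= fieldSize.y);
    assert(lower.z >= 0 && lower.z + size.z <= fieldSize.z);

    for (int64_t i = 0; i < size.x; i++)
        for (int64_t j = 0; j < size.y; j++)
            for (int64_t k = 0; k < size.z; k++) {
                int64_t f = fieldSize.y * fieldSize.z * (lower.x + i)
                          + fieldSize.z * (lower.y + j)
                          + (lower.z + k);
                int64_t p = size.y * size.z * i + size.z * j + k;
                field[f] += patch[p];
            }
}
```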

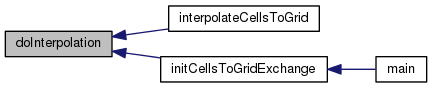

void doInterpolation ()

For each local cell, its density value is interpolated across neighbouring grid vertices using the Cloud-In-Cell method. Computed values are stored in patches instead of in field buffers. No additional memory allocations are made here.

Definition at line 181 of file interp.c.

References cells, gridEndIdx, gridResolution, gridStartIdx, lnc, MPIsize, patch, x, cellData::x, doubleVector3d::x, int64Vector3d::x, cellData::y, doubleVector3d::y, int64Vector3d::y, cellData::z, doubleVector3d::z, and int64Vector3d::z.
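In the Cloud-In-Cell method, a point contributes to the eight vertices of the grid cell that contains it, with weights given by products of per-axis linear factors. The following is a minimal sketch of that weight computation. It assumes the position is already expressed in grid units (divided by the grid spacing) and is non-negative. `cicWeights` is a hypothetical helper, not the code in interp.c.

```c
#include <assert.h>

/* Hypothetical helper: eight Cloud-In-Cell weights for one point.
   pos[] is the position in grid units (assumed non-negative).
   base[] receives the index of the lower vertex; w[a][b][c] is the weight
   of the vertex (base[0]+a, base[1]+b, base[2]+c). */
void cicWeights(const double pos[3], long base[3], double w[2][2][2])
{
    double d[3];
    for (int ax = 0; ax < 3; ax++) {
        assert(pos[ax] >= 0.0);
        base[ax] = (long)pos[ax];          /* truncation equals floor for pos >= 0 */
        d[ax] = pos[ax] - (double)base[ax]; /* fractional offset in [0, 1) */
    }
    for (int a = 0; a < 2; a++)
        for (int b = 0; b < 2; b++)
            for (int c = 0; c < 2; c++)
                w[a][b][c] = (a ? d[0] : 1.0 - d[0])
                           * (b ? d[1] : 1.0 - d[1])
                           * (c ? d[2] : 1.0 - d[2]);
}
```

The eight weights always sum to one, so the total deposited density equals the density of the cell regardless of where it sits between vertices.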

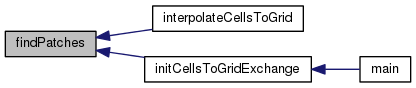

void findPatches ()

For each local cell, we check its position in the grid. If the cell is located in the grid partition of another process, then the information about this cell should be sent to that remote process by MPI communication. This is done by preparing special patches and sending the information about a whole batch of cells at once rather than sending it cell by cell. This function identifies the patches and allocates memory buffers for them.

Definition at line 60 of file interp.c.

References cells, cicIntersect, cicPatch, gridEndIdx, gridResolution, gridStartIdx, lnc, MPIsize, sdim, x, cellData::x, int64Vector3d::x, cellData::y, int64Vector3d::y, cellData::z, and int64Vector3d::z.
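One way to picture patch identification is as a box intersection: the range of grid vertices touched by local cells is intersected with the index range owned by another process, and a non-empty result defines a patch. The sketch below shows that idea under stated assumptions (inclusive index bounds, one box per remote process). `Idx3` and `intersectPatch` are hypothetical names, and the actual logic in findPatches may differ.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical stand-in for struct int64Vector3d. */
typedef struct { int64_t x, y, z; } Idx3;

int64_t maxI64(int64_t a, int64_t b) { return a > b ? a : b; }
int64_t minI64(int64_t a, int64_t b) { return a < b ? a : b; }

/* Intersect the box of grid vertices touched by local cells,
   [cellLo, cellHi], with the index range owned by another process,
   [partLo, partHi]. All bounds are inclusive. Returns 1 and fills
   lower/upper/size when the boxes overlap; returns 0 and leaves the
   outputs untouched when no patch is needed. */
int intersectPatch(Idx3 cellLo, Idx3 cellHi, Idx3 partLo, Idx3 partHi,
                   Idx3 *lower, Idx3 *upper, Idx3 *size)
{
    Idx3 lo = { maxI64(cellLo.x, partLo.x),
                maxI64(cellLo.y, partLo.y),
                maxI64(cellLo.z, partLo.z) };
    Idx3 hi = { minI64(cellHi.x, partHi.x),
                minI64(cellHi.y, partHi.y),
                minI64(cellHi.z, partHi.z) };

    if (lo.x > hi.x || lo.y > hi.y || lo.z > hi.z)
        return 0;

    assert(lower && upper && size);
    *lower = lo;
    *upper = hi;
    size->x = hi.x - lo.x + 1;
    size->y = hi.y - lo.y + 1;
    size->z = hi.z - lo.z + 1;
    return 1;
}
```

Under this scheme a patch buffer would hold `size.x * size.y * size.z` doubles, which matches the flat indexing used by the `patch` macro.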

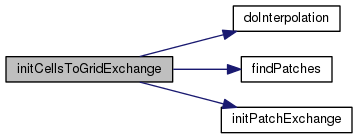

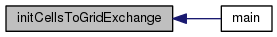

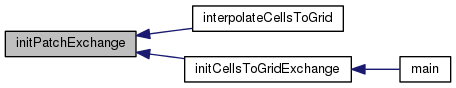

void initCellsToGridExchange ()

This function initializes data exchange between processes required in cells-to-grid interpolation. This function enables overlapping communication and computations.

Definition at line 598 of file interp.c.

References doInterpolation(), findPatches(), gfields, and initPatchExchange().

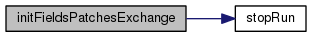

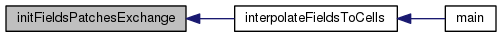

void initFieldsPatchesExchange ()

At this stage each local cell should receive the values of the global fields in the grid. Field patches are filled with the appropriate values and non-blocking communication is initiated. This is executed after the global fields are computed. Field patch buffers are allocated here; their sizes are the same as those from the previous CIC communication. Receiving field patches and the MPI_Request tables are also allocated here.

Definition at line 398 of file interp.c.

References cicReceiver, cicReqRecv, cicReqSend, cicSender, fieldAddr, fieldsPatches, fieldsPatchesCommBuff, gridSize, gridStartIdx, MPIrank, MPIsize, NFIELDS, stopRun(), x, int64Vector3d::x, int64Vector3d::y, and int64Vector3d::z.

void initPatchExchange ()

Patches are sent to the receiving processes using a non-blocking communication scheme (Isend, Irecv). Receiving patches are allocated here. MPI_Request tables are allocated here.

Definition at line 259 of file interp.c.

References cicIntersect, cicPatch, cicReceiver, cicRecvPatch, cicReqRecv, cicReqSend, cicSender, MPIrank, MPIsize, x, int64Vector3d::x, doubleVector3d::y, doubleVector3d::z, and int64Vector3d::z.

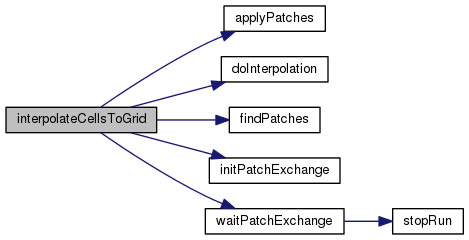

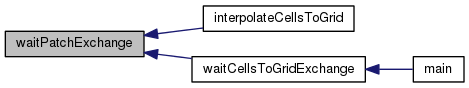

void interpolateCellsToGrid ()

This is the driver function that interpolates cellular data to grid data. It does not enable overlapping of communication and computation.

Definition at line 583 of file interp.c.

References applyPatches(), doInterpolation(), findPatches(), initPatchExchange(), and waitPatchExchange().

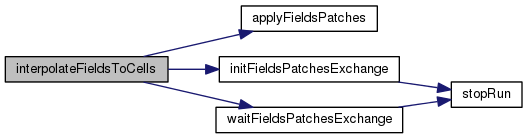

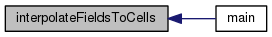

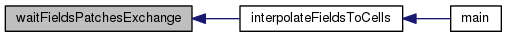

void interpolateFieldsToCells ()

This function is used to interpolate field data back to cells. Overlapping of communication and computations is not implemented here. This function deallocates all important arrays used in interpolation.

Definition at line 627 of file interp.c.

References applyFieldsPatches(), cicIntersect, cicReceiver, cicSender, gfields, initFieldsPatchesExchange(), and waitFieldsPatchesExchange().

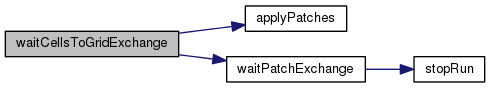

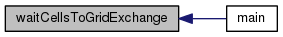

void waitCellsToGridExchange ()

This function waits for patch communication to finish in cells-to-grid interpolation. This function enables overlapping communication and computations.

Definition at line 613 of file interp.c.

References applyPatches(), gfields, and waitPatchExchange().
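Going by the References lists, initCellsToGridExchange() and waitCellsToGridExchange() together cover the same calls as the blocking driver interpolateCellsToGrid(), split so that other work can run while patches are in flight. The sketch below illustrates that split with stubs. The stub bodies only log a letter, the call order inside each half is an assumption, `independentWork` is hypothetical, and the real functions also reference gfields, which is ignored here.

```c
#include <assert.h>
#include <string.h>

/* Call log used only to show ordering; not part of interp.c. */
char callLog[32];

void logCall(char c)
{
    size_t n = strlen(callLog);
    assert(n + 1 < sizeof callLog);
    callLog[n] = c;
    callLog[n + 1] = '\0';
}

/* Stubs standing in for the real interp.c functions (same names and
   return types; the meaning of the int return values is not documented
   on this page, so the stubs simply return 0). */
void findPatches(void)       { logCall('F'); }
void doInterpolation(void)   { logCall('D'); }
void initPatchExchange(void) { logCall('I'); }
int  waitPatchExchange(void) { logCall('W'); return 0; }
int  applyPatches(void)      { logCall('A'); return 0; }

/* Assumed bodies, inferred from the References lists only. */
void initCellsToGridExchangeSketch(void)
{
    findPatches();
    doInterpolation();
    initPatchExchange();   /* non-blocking sends and receives start here */
}

void waitCellsToGridExchangeSketch(void)
{
    waitPatchExchange();
    applyPatches();
}

/* Hypothetical work that does not depend on the density field. */
void independentWork(void) { logCall('w'); }

void overlappedStep(void)
{
    initCellsToGridExchangeSketch();
    independentWork();     /* runs while patches are in flight */
    waitCellsToGridExchangeSketch();
}
```

Calling the two halves back to back, with nothing in between, corresponds to the non-overlapping behaviour described for interpolateCellsToGrid().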

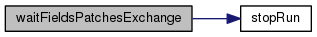

void waitFieldsPatchesExchange ()

Waits for communication to finish. MPI_Request tables are deallocated here. Field patch buffers are deallocated here.

Definition at line 475 of file interp.c.

References cicReceiver, cicReqRecv, cicReqSend, cicSender, fieldsPatchesCommBuff, MPIsize, and stopRun().

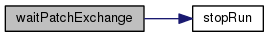

int waitPatchExchange ()

Waits for communication to finish. MPI_Request tables are deallocated here.

Definition at line 309 of file interp.c.

References cicReceiver, cicReqRecv, cicReqSend, cicSender, MPIsize, and stopRun().

struct int64Vector3d * lowerPatchCorner

struct int64Vector3d * lowerPatchCornerR

struct int64Vector3d * patchSize

struct int64Vector3d * patchSizeR

struct int64Vector3d * upperPatchCorner

struct int64Vector3d * upperPatchCornerR